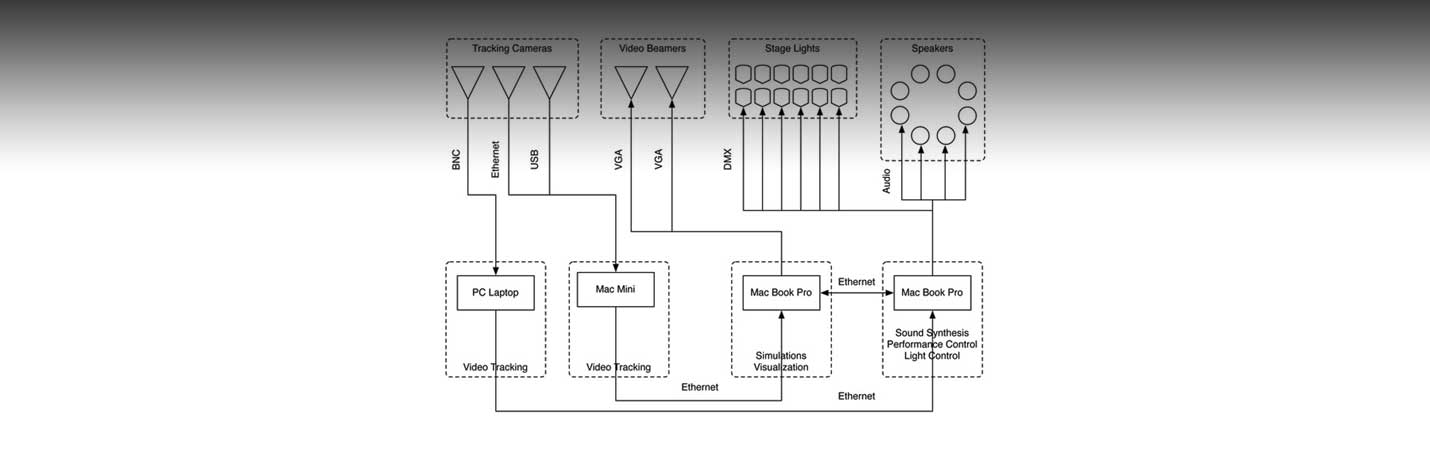

The software of Stocos is composed of several applications that exchange data via the OSC (Open Sound Control) protocol. A simulation software that has been developed by Daniel Bisig for this piece models the movements of large groups of simple entities in space, in particular the Brownian movement of microscopic particles and the coherent movement of flocking animals. The implementation of these simulations is based on a C++ simulation library that has been developed by one of the authors as part of a research project about swarm-based music and art [4][5]. One of the main benefits of this library is that it enables the creation of highly customized swarm simulations that can be extended and modified at runtime. Because these simulations are controlled and communicate via OSC, they can easily interact with other software.
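To make the two kinds of movement concrete, the following is a minimal sketch of a Brownian step and a flocking step for 2D agents. It is not the API of the authors' simulation library: the `Agent` struct, the functions `brownianStep` and `flockStep`, and the weights `cohesion`, `alignment` and `separation` are hypothetical, and the flocking rule is a generic Reynolds-style boids update chosen for illustration.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <random>
#include <vector>

// Hypothetical 2D agent; this is NOT the API of the authors' simulation library.
struct Agent {
    double x, y;    // position
    double vx, vy;  // velocity
};

// Brownian movement: every agent is displaced by an independent Gaussian step
// with standard deviation sigma (sigma must be > 0).
void brownianStep(std::vector<Agent>& agents, double sigma, std::mt19937& rng) {
    std::normal_distribution<double> step(0.0, sigma);
    for (Agent& a : agents) {
        a.x += step(rng);
        a.y += step(rng);
    }
}

// Flocking in the style of Reynolds' boids: each agent steers towards the centre of its
// neighbours (cohesion), towards their mean velocity (alignment) and away from close
// neighbours (separation). All agents are updated from the same previous state.
void flockStep(std::vector<Agent>& agents, double radius, double dt,
               double cohesion, double alignment, double separation) {
    std::vector<Agent> next = agents;
    for (std::size_t i = 0; i < agents.size(); ++i) {
        const Agent& self = agents[i];
        double cx = 0, cy = 0, ax = 0, ay = 0, sx = 0, sy = 0;
        int n = 0;
        for (std::size_t j = 0; j < agents.size(); ++j) {
            if (j == i) continue;
            const double dx = agents[j].x - self.x;
            const double dy = agents[j].y - self.y;
            const double d = std::hypot(dx, dy);
            if (d == 0.0 || d > radius) continue;  // ignore coincident and distant agents
            cx += agents[j].x;  cy += agents[j].y;
            ax += agents[j].vx; ay += agents[j].vy;
            sx -= dx / (d * d); sy -= dy / (d * d);  // repulsion whose magnitude is 1/d
            ++n;
        }
        if (n > 0) {
            next[i].vx += dt * (cohesion * (cx / n - self.x) +
                                alignment * (ax / n - self.vx) + separation * sx);
            next[i].vy += dt * (cohesion * (cy / n - self.y) +
                                alignment * (ay / n - self.vy) + separation * sy);
        }
        next[i].x += dt * next[i].vx;  // integrate position with the updated velocity
        next[i].y += dt * next[i].vy;
    }
    agents = next;
}
```

Updating from a copy of the previous state keeps the result independent of the order in which agents are visited; a runtime-extensible library would presumably expose such rules as interchangeable behaviors rather than fixed functions.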

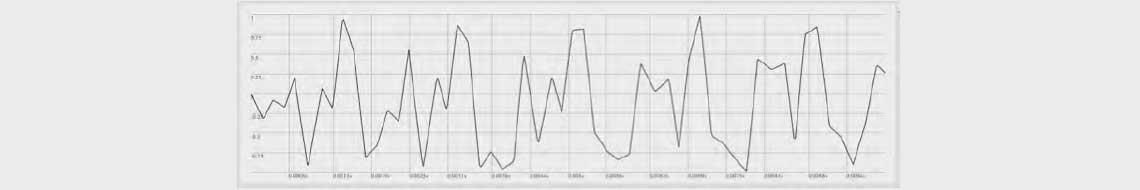

A sound synthesis software employs simulated Brownian movements as a stochastic mechanism to modify individual digital samples and thereby directly manipulate the sound pressure curve of an audio waveform. In this method, called Dynamic Stochastic Synthesis, the waveform is polygonized via a number of breakpoints (see figure 10). Each breakpoint is continuously perturbed by two random walks: one controls its amplitude and the other its duration, i.e. the time interval to the next breakpoint. The values generated by the random walks are confined by so-called mirror barriers, which reflect any value that would cross a boundary back into the permitted range. For Stocos, Dynamic Stochastic Synthesis has been implemented in the SuperCollider programming environment by Pablo Palacio. On an algorithmic level, the activity of the simulated agents modifies the constraints on the simulated Brownian movements that give rise to the synthesized sounds.

Figure 10: Dynamic Stochastic Synthesis. Time-domain plot of a waveform that has been created via Dynamic Stochastic Synthesis.
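The breakpoint mechanism described above can be sketched as follows. This is a simplified illustration, not Pablo Palacio's SuperCollider implementation: the class `DynamicStochasticOsc`, its parameters and the use of plain first-order random walks with uniform steps are assumptions made for brevity. Durations are measured in samples, amplitudes are confined to [-1, 1], and both random walks are folded back by mirror barriers.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <random>
#include <vector>

// Mirror barrier: fold a value back into [lo, hi] as if both bounds were mirrors.
double mirror(double v, double lo, double hi) {
    const double range = hi - lo;
    if (range <= 0.0) return lo;
    double t = std::fmod(v - lo, 2.0 * range);
    if (t < 0.0) t += 2.0 * range;
    return t <= range ? lo + t : hi - (t - range);
}

// Hypothetical, simplified oscillator in the spirit of Dynamic Stochastic Synthesis.
class DynamicStochasticOsc {
public:
    DynamicStochasticOsc(std::size_t numBreakpoints, double ampStep, double durStep,
                         double minDur, double maxDur, unsigned seed)
        : amps_(numBreakpoints, 0.0), durs_(numBreakpoints, 0.5 * (minDur + maxDur)),
          ampStep_(ampStep), durStep_(durStep), minDur_(minDur), maxDur_(maxDur),
          rng_(seed) {}

    // Barriers that an external process (e.g. the swarm) could modulate at runtime.
    void setDurationBarriers(double minDur, double maxDur) { minDur_ = minDur; maxDur_ = maxDur; }

    // Perturb every breakpoint once, then render one waveform period by linear interpolation.
    std::vector<double> nextPeriod() {
        std::uniform_real_distribution<double> walk(-1.0, 1.0);
        for (std::size_t i = 0; i < amps_.size(); ++i) {
            amps_[i] = mirror(amps_[i] + ampStep_ * walk(rng_), -1.0, 1.0);
            durs_[i] = mirror(durs_[i] + durStep_ * walk(rng_), minDur_, maxDur_);
        }
        std::vector<double> samples;
        for (std::size_t i = 0; i < amps_.size(); ++i) {
            const double a0 = amps_[i];
            const double a1 = amps_[(i + 1) % amps_.size()];   // last segment closes the period
            const long n = std::max(1L, std::lround(durs_[i]));  // segment length in samples
            for (long k = 0; k < n; ++k)
                samples.push_back(a0 + (a1 - a0) * static_cast<double>(k) / static_cast<double>(n));
        }
        return samples;
    }

private:
    std::vector<double> amps_, durs_;  // breakpoint amplitudes and durations (in samples)
    double ampStep_, durStep_, minDur_, maxDur_;
    std::mt19937 rng_;
};
```

Narrowing or widening the duration barriers changes the range of possible period lengths and hence the pitch region of the sound, which is one plausible way in which agent activity could act on the constraints of the random walks.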

A custom tracking software, developed in C++ by Daniel Bisig, provides more extensive, albeit slower, tracking information about the dancers’ positions, postures, contours and movements (see figure 9). This software acquires a video image from a ceiling-mounted digital camera and a depth image from a Kinect camera located in front of the stage. The tracking data is sent to the simulation software. Tracked movement and position information controls the creation, location and speed of simulated agents that are invisible to the audience but can be perceived by the other agents. Tracked contour information serves to manipulate spatial structures within the simulation space. Many of the swarm behaviors that have been specifically developed for the performance involve perceiving and responding to these tracking-based spatial structures.
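Since the applications exchange data via OSC, tracking results travel as OSC messages. The sketch below encodes one such message according to the OSC 1.0 encoding rules: the address pattern and the type tag string are NUL-terminated and padded to a multiple of 4 bytes, and `int32`/`float32` arguments are big-endian. The address `/tracking/dancer` and the argument layout (dancer id, normalized x, normalized y) are hypothetical, not the actual Stocos message format, and the UDP transport is omitted.

```cpp
#include <cassert>
#include <cstdint>
#include <cstring>
#include <string>
#include <vector>

static_assert(sizeof(float) == 4, "OSC float32 requires a 32-bit IEEE-754 float");

// Append an OSC-string: characters, a terminating NUL, then NUL padding to a 4-byte boundary.
// (The buffer length is always a multiple of 4 before each append.)
void appendOscString(std::vector<std::uint8_t>& buf, const std::string& s) {
    buf.insert(buf.end(), s.begin(), s.end());
    buf.push_back(0);
    while (buf.size() % 4 != 0) buf.push_back(0);
}

// Append a 32-bit word in big-endian (network) byte order.
void appendBigEndian32(std::vector<std::uint8_t>& buf, std::uint32_t u) {
    for (int shift = 24; shift >= 0; shift -= 8)
        buf.push_back(static_cast<std::uint8_t>(u >> shift));
}

void appendInt32(std::vector<std::uint8_t>& buf, std::int32_t v) {
    appendBigEndian32(buf, static_cast<std::uint32_t>(v));
}

void appendFloat32(std::vector<std::uint8_t>& buf, float f) {
    std::uint32_t bits;
    std::memcpy(&bits, &f, sizeof bits);  // reinterpret the IEEE-754 bit pattern
    appendBigEndian32(buf, bits);
}

// Hypothetical message: dancer id plus normalized stage coordinates.
std::vector<std::uint8_t> encodeDancerPosition(std::int32_t id, float x, float y) {
    std::vector<std::uint8_t> buf;
    appendOscString(buf, "/tracking/dancer");
    appendOscString(buf, ",iff");  // type tags: one int32 followed by two float32
    appendInt32(buf, id);
    appendFloat32(buf, x);
    appendFloat32(buf, y);
    return buf;
}
```

The resulting byte vector would form the payload of a single UDP datagram; the receiving simulation would decode it and, for example, move or spawn a hidden agent at the given position.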

Figure 9: Tracking Software. A custom-developed tracking software detects the dancers’ positions (grey bounding boxes), postures (yellow direction lines), contours (grey silhouettes) and movements (red circles and gradients).

For those sections of the performance during which the dancers’ movements directly affect the live-generated synthetic sounds, an analogue video camera is used in conjunction with the EyeCon video tracking software and the SuperCollider programming environment [3] to provide low-latency movement detection.

Tracking Regions. The stage is divided into several tracking regions that are associated with different sounds.
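The region-based mapping between movement and sound can be illustrated with simple frame differencing: for each rectangular region, count the fraction of pixels whose brightness changed between two consecutive grayscale frames. This is an assumed, generic technique for low-latency motion detection, not a description of how EyeCon works internally; the `Region` struct, its `soundId` field and the `threshold` parameter are hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstdlib>
#include <vector>

// Hypothetical rectangular tracking region in pixel coordinates, linked to one sound.
struct Region {
    int x0, y0, x1, y1;  // half-open bounds: [x0, x1) x [y0, y1)
    int soundId;         // index of the sound this region controls
};

// Fraction of pixels inside each region whose brightness changed by more than
// `threshold` between two consecutive grayscale frames of the given width.
std::vector<double> regionActivity(const std::vector<std::uint8_t>& prev,
                                   const std::vector<std::uint8_t>& curr,
                                   int width, const std::vector<Region>& regions,
                                   int threshold) {
    std::vector<double> activity;
    for (const Region& r : regions) {
        int changed = 0, total = 0;
        for (int y = r.y0; y < r.y1; ++y) {
            for (int x = r.x0; x < r.x1; ++x) {
                const std::size_t i = static_cast<std::size_t>(y) * width + x;
                if (std::abs(int(curr[i]) - int(prev[i])) > threshold) ++changed;
                ++total;
            }
        }
        activity.push_back(total > 0 ? static_cast<double>(changed) / total : 0.0);
    }
    return activity;
}
```

Each activity value could then scale, for instance, the amplitude of the sound identified by the region's `soundId`; because the computation only compares two frames, it adds almost no latency beyond the camera's frame period.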